
fpmas 1.6


#include <server_pack.h>

Public Member Functions

| Return type | Member function |
|---|---|
| | ServerPackBase (fpmas::api::communication::MpiCommunicator &comm, api::TerminationAlgorithm &termination, api::Server &mutex_server, api::Server &link_server) |
| virtual api::Server & | mutexServer () |
| virtual api::Server & | linkServer () |
| void | handleIncomingRequests () override |
| void | setEpoch (Epoch epoch) override |
| Epoch | getEpoch () const override |
| void | terminate () |
| std::vector< fpmas::api::communication::Request > & | pendingRequests () |
| void | waitSendRequest (fpmas::api::communication::Request &req) |
| template<typename T > void | waitResponse (fpmas::api::communication::TypedMpi< T > &mpi, int source, api::Tag tag, fpmas::api::communication::Status &status) |
| void | waitVoidResponse (fpmas::api::communication::MpiCommunicator &comm, int source, api::Tag tag, fpmas::api::communication::Status &status) |

Public Member Functions inherited from fpmas::synchro::hard::api::Server

| Return type | Member function |
|---|---|
| virtual void | setEpoch (Epoch epoch)=0 |
| virtual Epoch | getEpoch () const =0 |
| virtual void | handleIncomingRequests ()=0 |

A Server implementation wrapping an api::MutexServer and an api::LinkServer.

This is used to apply an api::TerminationAlgorithm to both instances simultaneously. More precisely, while the termination algorithm is being applied to this server, the system can still answer both mutex AND link requests, so that global termination can be fully determined.

The wait*() methods also make it easy to wait for point-to-point communications initiated by either server without deadlock, by ensuring progress on both servers.
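The forwarding idea behind the pack can be illustrated with a minimal C++ sketch. `Server`, `CountingServer`, `PackSketch` and the `Epoch` enum below are hypothetical simplifications written for this illustration. They are not the fpmas types. The sketch only shows how one object can run a reception cycle, or switch the epoch, on two wrapped servers at once.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical, simplified stand-ins: NOT the real
// fpmas::synchro::hard::api::Server interface or fpmas Epoch type.
enum class Epoch { EVEN, ODD };

struct Server {
    virtual ~Server() = default;
    virtual void handleIncomingRequests() = 0;
    virtual void setEpoch(Epoch epoch) = 0;
    virtual Epoch getEpoch() const = 0;
};

// Toy server that only counts how many reception cycles it performed.
struct CountingServer : Server {
    std::size_t cycles = 0;
    Epoch epoch = Epoch::EVEN;
    void handleIncomingRequests() override { ++cycles; }
    void setEpoch(Epoch e) override { epoch = e; }
    Epoch getEpoch() const override { return epoch; }
};

// Sketch of the "pack" idea: a Server that forwards every call to two
// wrapped servers, so one reception cycle serves both of them.
class PackSketch : public Server {
    Server& mutex_server_;
    Server& link_server_;
public:
    PackSketch(Server& mutex_server, Server& link_server)
        : mutex_server_(mutex_server), link_server_(link_server) {}

    void handleIncomingRequests() override {
        mutex_server_.handleIncomingRequests();
        link_server_.handleIncomingRequests();
    }
    void setEpoch(Epoch e) override {
        mutex_server_.setEpoch(e);
        link_server_.setEpoch(e);
    }
    Epoch getEpoch() const override {
        // Both servers are always switched together, so they must agree.
        assert(mutex_server_.getEpoch() == link_server_.getEpoch());
        return mutex_server_.getEpoch();
    }
};
```

Because a termination algorithm only ever drives the pack, it cannot accidentally stop serving one kind of request while still waiting on the other.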

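ServerPackBase (fpmas::api::communication::MpiCommunicator &comm, api::TerminationAlgorithm &termination, api::Server &mutex_server, api::Server &link_server) [inline]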

ServerPackBase constructor.

| comm | MPI communicator |

| termination | termination algorithm used to terminate this server, terminating both mutex_server and link_server at once. |

| mutex_server | Mutex server |

| link_server | Link server |

virtual api::Server & mutexServer () [inline, virtual]

Reference to the internal api::MutexServer instance.

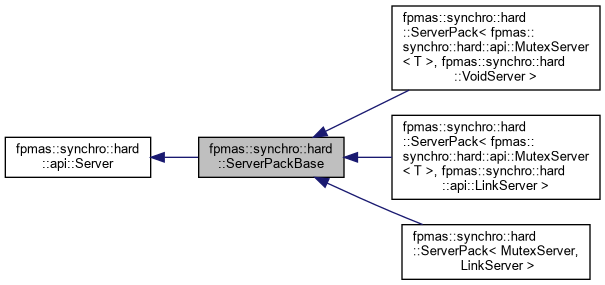

Reimplemented in fpmas::synchro::hard::ServerPack< MutexServer, LinkServer >, fpmas::synchro::hard::ServerPack< fpmas::synchro::hard::api::MutexServer< T >, fpmas::synchro::hard::VoidServer >, and fpmas::synchro::hard::ServerPack< fpmas::synchro::hard::api::MutexServer< T >, fpmas::synchro::hard::api::LinkServer >.

virtual api::Server & linkServer () [inline, virtual]

Reference to the internal api::LinkServer instance.

Reimplemented in fpmas::synchro::hard::ServerPack< MutexServer, LinkServer >, fpmas::synchro::hard::ServerPack< fpmas::synchro::hard::api::MutexServer< T >, fpmas::synchro::hard::VoidServer >, and fpmas::synchro::hard::ServerPack< fpmas::synchro::hard::api::MutexServer< T >, fpmas::synchro::hard::api::LinkServer >.

void handleIncomingRequests () [inline, override, virtual]

Performs a reception cycle on the api::MutexServer AND on the api::LinkServer.

Implements fpmas::synchro::hard::api::Server.

void setEpoch (Epoch epoch) [inline, override, virtual]

Sets the Epoch of the api::MutexServer AND the api::LinkServer.

| epoch | new epoch |

Implements fpmas::synchro::hard::api::Server.

Epoch getEpoch () const [inline, override, virtual]

Gets the common epoch of the api::MutexServer and the api::LinkServer.

Implements fpmas::synchro::hard::api::Server.

void terminate () [inline]

Applies the termination algorithm to this ServerPack.

std::vector< fpmas::api::communication::Request > & pendingRequests () [inline]

Returns a list of all the pending non-blocking requests that are still waiting for completion.

Such non-blocking communications are notably used to send responses to READ and ACQUIRE requests.

Pending requests are guaranteed to be completed, at the latest, when the next terminate() call returns, so that their Request buffers can then be freed.

It is also valid to complete requests before the next terminate() call, for example using fpmas::api::communication::MpiCommunicator::test() or fpmas::api::communication::MpiCommunicator::testSome() in order to limit memory usage, as long as this cannot produce deadlock situations.
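A caller that wants to limit memory usage might periodically drop completed requests from the pending list. The sketch below assumes hypothetical `FakeRequest` and `testRequest()` stand-ins for `fpmas::api::communication::Request` and a non-blocking completion test such as `MpiCommunicator::test()`. It is not the fpmas implementation. It only shows the "test, then keep what is still pending" pattern.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a non-blocking request: `polls_left` simulates
// how many more completion tests are needed before the send completes.
struct FakeRequest {
    int id;
    int polls_left;
};

// Plays the role of a non-blocking completion test (in the spirit of
// MPI_Test): advances the simulated request and reports completion.
bool testRequest(FakeRequest& req) {
    if (req.polls_left > 0) {
        --req.polls_left;
    }
    return req.polls_left == 0;
}

// Tests every pending request once, drops the ones that completed and
// returns how many were released. Incomplete requests keep their order.
std::size_t releaseCompleted(std::vector<FakeRequest>& pending) {
    std::vector<FakeRequest> still_pending;
    for (FakeRequest& req : pending) {
        if (!testRequest(req)) {
            still_pending.push_back(req);
        }
    }
    const std::size_t released = pending.size() - still_pending.size();
    pending.swap(still_pending);
    return released;
}
```

Each request is tested once per call and never waited on. This matches the documented constraint that early completion must not introduce a blocking wait that could deadlock.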

void waitSendRequest (fpmas::api::communication::Request &req) [inline]

Method used to wait for an MPI_Request to be sent.

The request might be performed by the api::MutexServer OR the api::LinkServer. In any case, this method handles incoming requests (see api::MutexServer::handleIncomingRequests() and api::LinkServer::handleIncomingRequests()) until the MPI_Request is complete, in order to avoid deadlock.

| req | MPI request to complete |
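The deadlock-avoidance loop can be sketched as follows. `ToyServer`, `ToyRequest` and `waitSendRequestSketch()` are hypothetical. The test scenario assumes a send that can only complete once the local link server has answered a request from the peer. A loop that merely spun on the request, without serving incoming requests, would therefore never return.

```cpp
#include <cassert>
#include <functional>

// Hypothetical server: counts reception cycles and runs a callback that
// simulates the work done while serving incoming requests.
struct ToyServer {
    int cycles = 0;
    std::function<void()> on_cycle;
    void handleIncomingRequests() {
        ++cycles;
        if (on_cycle) on_cycle();
    }
};

// Hypothetical send request: test() returns true once the send completed.
struct ToyRequest {
    std::function<bool()> test;
};

// Sketch of the waitSendRequest() idea: keep running reception cycles on
// BOTH servers until the send completes, so that a peer blocked on one of
// our answers can make progress and post its matching receive.
void waitSendRequestSketch(ToyRequest& req,
                           ToyServer& mutex_server, ToyServer& link_server) {
    while (!req.test()) {
        mutex_server.handleIncomingRequests();
        link_server.handleIncomingRequests();
    }
}
```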

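template<typename T > void waitResponse (fpmas::api::communication::TypedMpi< T > &mpi, int source, api::Tag tag, fpmas::api::communication::Status &status) [inline]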

Method used to wait for request responses with data (e.g. to READ, ACQUIRE) from the specified mpi instance to be available.

The request might be performed by the api::MutexServer OR the api::LinkServer. In any case, this method handles incoming requests (see api::MutexServer::handleIncomingRequests() and api::LinkServer::handleIncomingRequests()) until a response is available, in order to avoid deadlock.

Upon return, it is safe to receive the response with api::communication::MpiCommunicator::recv() using the same source and tag.

| mpi | TypedMpi instance to probe |

| source | rank of the process from which the response should be received |

| tag | response tag (READ_RESPONSE, ACQUIRE_RESPONSE, etc) |

| status | reference to an fpmas::api::communication::Status, passed to api::communication::MpiCommunicator::Iprobe |
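The probe-then-receive pattern can be sketched with a hypothetical in-memory `ToyMailbox<T>` playing the role of the TypedMpi instance. The loop alternates a non-blocking probe with one reception cycle on each server until a message matching (source, tag) is available. Only then is `recv()` called. `ToyServer`, `ToyMailbox` and `waitResponseSketch()` are illustrative names, not fpmas API, and the real method also fills its `status` argument, which this sketch omits.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <utility>

// Hypothetical server: counts reception cycles and runs a callback on each.
struct ToyServer {
    int cycles = 0;
    std::function<void()> on_cycle;
    void handleIncomingRequests() {
        ++cycles;
        if (on_cycle) on_cycle();
    }
};

// Hypothetical in-memory stand-in for a TypedMpi<T>: pending messages are
// indexed by (source rank, tag).
template <typename T>
struct ToyMailbox {
    std::multimap<std::pair<int, int>, T> messages;

    // Non-blocking probe, in the spirit of MPI_Iprobe.
    bool iprobe(int source, int tag) const {
        return messages.count(std::make_pair(source, tag)) > 0;
    }

    // Receives (and removes) one matching message. Only valid after a
    // successful iprobe() with the same source and tag.
    T recv(int source, int tag) {
        auto it = messages.find(std::make_pair(source, tag));
        assert(it != messages.end());
        T value = it->second;
        messages.erase(it);
        return value;
    }
};

// Sketch of the waitResponse() idea: while no matching response is
// available, run one reception cycle on each server instead of blocking.
template <typename T>
void waitResponseSketch(const ToyMailbox<T>& mpi, int source, int tag,
                        ToyServer& mutex_server, ToyServer& link_server) {
    while (!mpi.iprobe(source, tag)) {
        mutex_server.handleIncomingRequests();
        link_server.handleIncomingRequests();
    }
}
```

Probing instead of calling a blocking receive directly is what lets the local servers keep answering other processes while this one waits.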

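void waitVoidResponse (fpmas::api::communication::MpiCommunicator &comm, int source, api::Tag tag, fpmas::api::communication::Status &status) [inline]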

Method used to wait for request responses without data (e.g. to LOCK, UNLOCK) from the specified comm instance to be available.

The request might be performed by the api::MutexServer OR the api::LinkServer. In any case, this method handles incoming requests (see api::MutexServer::handleIncomingRequests() and api::LinkServer::handleIncomingRequests()) until a response is available, in order to avoid deadlock.

Upon return, it is safe to receive the response with api::communication::MpiCommunicator::recv() using the same source and tag.

| comm | MpiCommunicator instance to probe |

| source | rank of the process from which the response should be received |

| tag | response tag (e.g. the tag of the response to a LOCK or UNLOCK request) |

| status | reference to an fpmas::api::communication::Status, passed to api::communication::MpiCommunicator::Iprobe |